The playground of the mind was once populated with ideas fueled by books, stories and art. Today, the raw material of imagination has taken on a new, technology-mediated form. Some say the impact of technology on individuals and society is greater now than ever. Others argue that technology has always shaped us, and that its impact only seems greater now because we are living through it.

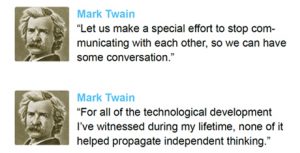

That contention is only one part of a larger, longer-standing debate about whether technology has also diminished our ability to think for ourselves and form our own opinions. In 1898, the much-admired American writer Mark Twain bemoaned the fact that for all of the technological development he witnessed during his lifetime, none of it helped propagate independent thinking. Were Mr. Twain alive today, he would be just as disappointed by the current state of thinking.

Consider social media in the context of independent thought, and statements such as “I get my news and information from Facebook” or “If it isn’t on Facebook, I don’t know anything about it.” One might assume the speaker is a digital-native Millennial who has probably never read a physical newspaper or watched the nightly news. Increasingly, though, it’s members of older generations making those statements.

Anyone of any age who treats a social media platform like Facebook as a primary news source can hardly be called discerning. Is the information shared on Facebook accurate, let alone balanced? Is the evidence supporting both sides of an argument shared and debated so that readers can arrive at their own conclusions? Quite simply, no. Facebook is a treasure trove of sound bites, news snippets and opinion couched as fact. It is far easier for the reader to skim the headlines, absorb whatever seems reasonable and move on to a new topic than to take the time to evaluate the available information and form an independent opinion.

What Facebook is to news and information, Pinterest is to creativity and imagination. Once, our creativity and imagination were limited by our own ability to dream up something new, different, innovative or clever, or, at most, by the abilities of the friends and family we turned to for help. With the advent of Pinterest, we can simply go to the site, search for “dinner party,” and get back a seemingly endless pool of ideas for creating an evening sure to be the envy of all. Rather than racking her brain for impressive-but-inexpensive party decorations, delicious-but-easy meals, the perfect wine pairings, and parting gifts that will make the guests eager to return for another celebration, the hostess can search Pinterest, find ideas she likes and replicate them. Simple? Yes. Impressive? Perhaps. Imaginative? Not so much.

For people who have neither the time nor the patience to read even short social media posts before forming their opinions, there’s another solution: infographics.

Quite often, infographics are not only a beautiful way to communicate comparative or complex information, but also an effective way to increase comprehension of it. Imagine the difficulty of describing in words alone the past 50 years of space exploration, or the intricate details of a comparison between Disney and Marvel, and you can understand the truth in the adage, “A picture is worth a thousand words.” However, just because a well-designed infographic communicates information in an understandable fashion doesn’t ensure that the reader thinks about the information presented. Rather, the reader quite possibly (probably?) sees the data, ingests it and moves on without any evaluation or thoughtful consideration. The idea surely has Mr. Twain ranting from the heavens above about infographics’ ability to feed our mental laziness.

At the pinnacle of technological development in the past three decades stands the ascendancy of the Internet, arguably the most useful invention since the wheel and the most ubiquitous since sliced bread. With a world of information a mere keystroke or two away, the fruit of the tree of knowledge is abundant and ripe for the taking. It seems, though, that rather than gorging on this unending bounty, the majority of society nibbles, samples and then tosses most of it away. In fact, instead of making us smarter, the Internet might be doing quite the opposite.

In his 2008 article for The Atlantic, “Is Google Making Us Stupid?” writer Nicholas Carr argued that the nature of the Internet is eroding our ability to think critically and in depth. “What the Net seems to be doing,” writes Carr, “is chipping away my capacity for concentration and contemplation. My mind now expects to take in information the way the Net distributes it: in a swiftly moving stream of particles.” As the mind skims the surface of information, it seldom pauses to plumb the depths that lead to critical, independent thought. It also pays little attention to the reliability of its sources, which leads even an otherwise deliberate and diligent thinker to quote dubious sources such as Wikipedia.

Technological developments have truncated our ability to think and imagine, but the damage doesn’t stop there. Technology has also lessened our ability to converse and engage with others in a way that offers any real meaning or value. Rarely do we participate in long, impassioned conversations or even brief-but-meaningful exchanges. That kind of interaction has been replaced by electronic communication, and email was our gateway drug.

With the advent of email, face-to-face meetings and phone calls could be avoided with the click of a button. Entire conversations, business transactions and more have transpired purely through email. Texting arrived and exacerbated the situation, further eliminating the need for a phone call. With the ability to text, one didn’t need to wait for an emailed response when an immediate one was needed or preferred. Nor did one need to adhere to the tiresome convention of accuracy in spelling, grammar and punctuation, since the communication was undoubtedly — ahem — hurried.

The communications abyss cracked wide open with the launch of Twitter. Where texts are short, tweets are even shorter because of the platform’s original 140-character limit. While the genius of Twitter is that it gives anyone and everyone the opportunity to broadcast their opinions, news and other information to whoever cares to pay attention, its character limit forced users to communicate in a way never before experienced. A message one might have conveyed in several paragraphs of email or several sentences of text could be shared on Twitter only as one very brief, curtailed thought. Mischievously, one has to wonder if Twain would be on Twitter. Oh, the pith.

Although we are in the midst of rapid technological change, just as we were 200 years ago and 20 years ago, and just as we will be in all the years to come, those changes and the developments associated with them have done little to improve the individual’s ability to think independently or imagine fervently. They haven’t fostered critical thinking or new methods of analysis, nor have they reinforced the weak skills we already possess. Technological change has simply redefined the formats and parameters within which we think, imagine and communicate. But like the weather in Texas, if you don’t like it, just wait a little while; it’ll soon change again.